Meta has recently released LLaMA, a collection of foundational large language models ranging from 7 to 65 billion parameters.

LLaMA is generating a lot of excitement because it is smaller than GPT-3 but performs better. For example, LLaMA's 13B architecture outperforms GPT-3 on most benchmarks despite being 10 times smaller. This new collection of foundational models opens the door to faster inference and ChatGPT-like real-time assistants, while being cost-effective and running on a single GPU.

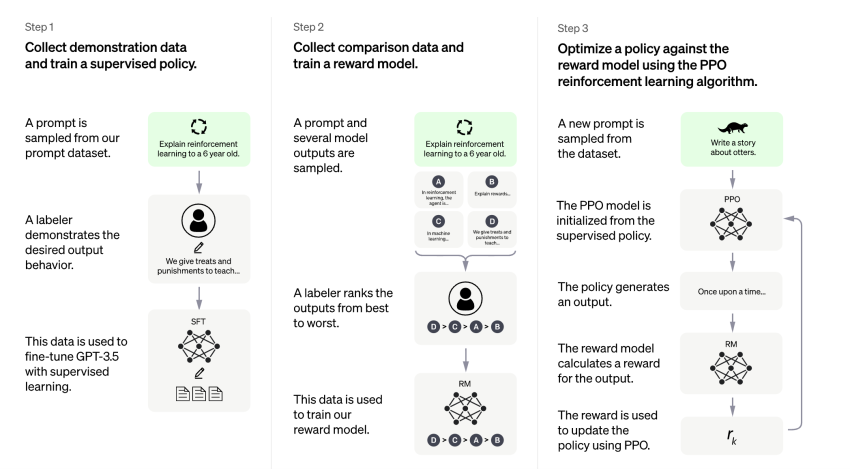
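To make the single-GPU claim more concrete, here is a rough back-of-the-envelope estimate of the memory needed just to hold the weights of each LLaMA size. It assumes 16-bit weights (2 bytes per parameter) and ignores activations, the KV cache, and optimizer state, so real requirements are higher. The function name and the use of the nominal parameter counts are illustrative assumptions.

```python
# Rough weight-only memory estimate; assumes fp16/bf16 storage (2 bytes per parameter).
# Parameter counts are the nominal sizes quoted in the article, not exact values.
NOMINAL_PARAMS_BILLIONS = {"7B": 7, "13B": 13, "33B": 33, "65B": 65}


def weight_memory_gb(params_billions: float, bytes_per_param: int = 2) -> float:
    """Return the approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return params_billions * 1e9 * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, size in NOMINAL_PARAMS_BILLIONS.items():
        print(f"LLaMA {name}: ~{weight_memory_gb(size):.0f} GB of weights in 16-bit precision")
```

Under these assumptions, the smaller models need on the order of tens of gigabytes for weights alone, which is why they are the natural candidates for a single high-memory GPU.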

However, LLaMA was not fine-tuned for instruction tasks with a Reinforcement Learning from Human Feedback (RLHF) training process.

The good news is that Nebuly has now released ChatLLaMA, the first open-source implementation of LLaMA based on RLHF:

- A complete open-source implementation that enables you to build a ChatGPT-style service based on pre-trained LLaMA models.

- Compared to the original ChatGPT, the training process and single-GPU inference are much faster and cheaper, thanks to the smaller size of LLaMA architectures.

- ChatLLaMA has built-in support for DeepSpeed ZeRO to speed up the fine-tuning process.

- The library also supports all LLaMA model architectures (7B, 13B, 33B, 65B), so you can fine-tune the model according to your preferences for training time and inference performance.

If you like the project, please consider leaving a star on the GitHub repository:
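As a rough illustration of what the reinforcement learning stage of RLHF optimizes, the sketch below computes a KL-penalized reward of the kind commonly used in RLHF pipelines: the reward model's score minus a penalty for drifting away from the original model. This is a generic toy example, not ChatLLaMA's code. The function `kl_penalized_reward`, the coefficient `beta`, and the sample numbers are assumptions made for illustration.

```python
from typing import Sequence


def kl_penalized_reward(
    reward_score: float,
    policy_logprobs: Sequence[float],
    reference_logprobs: Sequence[float],
    beta: float = 0.1,
) -> float:
    """Reward-model score minus beta times a per-sample KL estimate.

    The KL term is estimated as the sum over generated tokens of
    log pi_policy(token) - log pi_reference(token).
    """
    if len(policy_logprobs) != len(reference_logprobs):
        raise ValueError("log-prob sequences must have the same length")
    kl_estimate = sum(p - r for p, r in zip(policy_logprobs, reference_logprobs))
    return reward_score - beta * kl_estimate


if __name__ == "__main__":
    # Hypothetical numbers: the fine-tuned policy is more confident than the reference model.
    policy = [-0.5, -0.2, -0.1]
    reference = [-0.7, -0.4, -0.3]
    print(kl_penalized_reward(1.0, policy, reference))
```

The penalty keeps the fine-tuned model from exploiting the reward model by moving too far from the pre-trained behavior.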
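For readers unfamiliar with DeepSpeed ZeRO, the following is a minimal sketch of what a ZeRO configuration typically looks like. The keys are standard DeepSpeed configuration options, but the chosen values, the helper `to_json`, and whether ChatLLaMA consumes a configuration in exactly this form are assumptions. Check the repository for the actual setup.

```python
import json

# Illustrative DeepSpeed settings; values are placeholders, not ChatLLaMA defaults.
ds_config = {
    "train_micro_batch_size_per_gpu": 1,
    "gradient_accumulation_steps": 8,
    "fp16": {"enabled": True},
    "zero_optimization": {
        "stage": 2,  # partition optimizer state and gradients across GPUs
        "offload_optimizer": {"device": "cpu"},  # keep optimizer state in CPU RAM
    },
}


def to_json() -> str:
    """Serialize the config to the JSON text DeepSpeed expects in a config file."""
    return json.dumps(ds_config, indent=2)


if __name__ == "__main__":
    print(to_json())
```

Higher ZeRO stages trade extra communication for lower per-GPU memory use, which matters most for the larger LLaMA sizes.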

https://github.com/nebuly-ai/nebullvm/tree/main/apps/accelerate/chatllama

ChatLLaMA allows you to easily train LLaMA-based architectures in a similar way to ChatGPT, using RLHF. For example, below is the code to start training in the case of ChatLLaMA 7B.

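    from chatllama.rlhf.trainer import RLTrainer
    from chatllama.rlhf.config import Config

    # Load the training configuration from a YAML file
    path = "path_to_config_file.yaml"
    config = Config(path=path)

    # Build the RLHF trainer, run the distillation step, then train
    trainer = RLTrainer(config.trainer)
    trainer.distillate()
    trainer.train()

    # Plot the statistics collected during training
    trainer.training_stats.plot()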

Note that you must provide Meta's original weights and your custom dataset before starting the fine-tuning process. Alternatively, you can generate your own dataset using LangChain's agents.

    python generate_dataset.py

Nebuly has open-sourced the complete code to replicate the ChatLLaMA implementation, opening up the possibility for every user to fine-tune their own personalized ChatLLaMA assistants. The library can be further extended with the following additions:

- Checkpoints with fine-tuned weights

- Optimization techniques for faster inference

- Support for packaging the model into an efficient deployment framework

All developers are invited to join Nebuly's efforts toward more efficient and open ChatGPT-like assistants.

You can participate in the following ways:

- Submit an issue or PR on GitHub

- Join their Discord group to chat

Note: Thanks to Nebuly's team for the thought leadership/educational article above.

Asif Razzaq is the CEO of Marktechpost, LLC. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over a million monthly views, illustrating its popularity among audiences.
