The popularity and usage of Large Language Models (LLMs) are growing rapidly. With the enormous success of Generative Artificial Intelligence, these models are driving major economic and societal transformations. One of the best-known examples is ChatGPT, the chatbot developed by OpenAI, which imitates human conversation and has had millions of users since its release. Built on Natural Language Processing and Natural Language Understanding, it answers questions, generates unique and creative content, summarizes lengthy texts, completes code and emails, and so on.

LLMs with a huge number of parameters demand a lot of computational power, and efforts to reduce that cost have relied on methods such as model quantization and network pruning. Model quantization reduces the bit-level representation of the parameters in an LLM, whereas network pruning seeks to reduce the size of a neural network by removing specific weights, that is, setting them to zero. The lack of focus on pruning LLMs is mainly due to the hefty computational resources required for retraining, training from scratch, or iterative procedures in current approaches.
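To make the distinction concrete, here is a minimal Python sketch of the two ideas on a flat list of weights. It is an illustration only: the function names, the symmetric round-to-nearest quantization scheme, and the toy weights are assumptions for this example, not anything taken from the paper.

```python
def quantize_dequantize(weights, bits=4):
    """Snap each weight to a symmetric uniform grid representable in `bits` bits.

    Illustrative round-to-nearest scheme (an assumption, not the paper's method).
    """
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0:
        return list(weights)
    levels = 2 ** (bits - 1) - 1      # e.g. 7 positive levels for 4 bits
    scale = max_abs / levels          # step size of the grid
    return [round(w / scale) * scale for w in weights]


def magnitude_prune(weights, sparsity=0.5):
    """Set the `sparsity` fraction of weights with the smallest |w| to zero."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


weights = [0.8, -0.05, 0.3, -0.6, 0.02, 0.1]   # toy values
print(quantize_dequantize(weights, bits=4))     # same count of weights, fewer distinct values
print(magnitude_prune(weights, sparsity=0.5))   # [0.8, 0.0, 0.3, -0.6, 0.0, 0.0]
```

Quantization keeps every weight but stores it with less precision, while pruning keeps full precision and zeroes out a subset of the weights.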

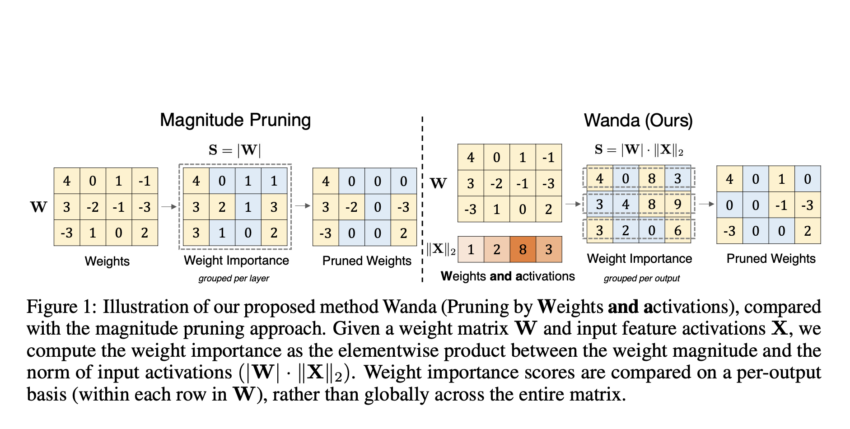

To overcome these limitations, researchers from Carnegie Mellon University, FAIR, Meta AI, and Bosch Center for AI have proposed a pruning approach called Wanda (pruning by Weights AND Activations). Inspired by the observation that LLMs display emergent large-magnitude features, Wanda induces sparsity in pretrained LLMs without the need for retraining or weight updates. Wanda prunes the weights with the smallest magnitudes multiplied by the corresponding input activations, and weights are compared independently for each model output because the pruning is done on an output-by-output basis.
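The idea described above can be sketched in a few lines of plain Python. This is a simplified illustration, not the authors' implementation: it assumes the score of a weight is its absolute value times the L2 norm of the matching input feature over a small calibration batch, and the function name `wanda_prune`, the toy matrix `W`, and the activations `X` are all made up for the example.

```python
import math


def wanda_prune(W, X, sparsity=0.5):
    """Prune each output row of W using |weight| * input-activation norm.

    W: list of rows, shape (out_features, in_features).
    X: calibration activations, shape (n_tokens, in_features).
    Assumes score = |W[i][j]| * L2 norm of input feature j (illustrative sketch).
    """
    n_in = len(W[0])
    # L2 norm of each input feature across all calibration tokens.
    norms = [math.sqrt(sum(x[j] ** 2 for x in X)) for j in range(n_in)]
    n_prune = int(n_in * sparsity)
    pruned = []
    for row in W:  # each output is handled independently
        scores = [abs(w) * norms[j] for j, w in enumerate(row)]
        order = sorted(range(n_in), key=lambda j: scores[j])
        drop = set(order[:n_prune])  # lowest-scoring weights in this row
        pruned.append([0.0 if j in drop else w for j, w in enumerate(row)])
    return pruned


W = [[0.9, 0.1, -0.5, 0.4],
     [0.2, -0.8, 0.3, 0.05]]
# Input feature 1 has an unusually large magnitude.
X = [[1.0, 10.0, 1.0, 1.0],
     [1.0, -10.0, 1.0, 1.0]]

print(wanda_prune(W, X, sparsity=0.5))
# [[0.9, 0.1, 0.0, 0.0], [0.0, -0.8, 0.3, 0.0]]
```

In the first row, plain magnitude pruning would remove the weight 0.1 because it is the smallest. Here it survives because it multiplies the large-magnitude input feature, and the weights -0.5 and 0.4 are removed instead. No weight is updated and nothing is retrained; only a forward pass over calibration data is needed to collect the activation norms.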

Wanda works well without retraining or weight updates, and the pruned LLM can be used for inference immediately. The study found that a tiny fraction of LLMs' hidden state features has unusually large magnitudes, which is a peculiar characteristic of these models. Building on this finding, the team discovered that adding input activations to the conventional weight magnitude pruning metric makes assessing weight importance surprisingly accurate.

The team used LLaMA, the most successful open-source LLM family, to evaluate Wanda empirically. The results demonstrated that Wanda could successfully identify efficient sparse networks directly from pretrained LLMs without the need for retraining or weight updates. It outperformed magnitude pruning by a significant margin while requiring lower computational cost, and it matched or surpassed the performance of SparseGPT, a recently proposed LLM pruning method that works accurately on large GPT-family models.

In conclusion, Wanda seems like a promising approach for addressing the challenges of pruning LLMs and offers a baseline for future research in this area by encouraging further exploration into understanding sparsity in LLMs. By improving the efficiency and accessibility of LLMs through pruning techniques, progress in the field of Natural Language Processing can continue, and these powerful models can become more practical and widely applicable.

Check out the Paper and GitHub link. All credit for this research goes to the researchers on this project.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a Data Science enthusiast with good analytical and critical thinking skills, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.
