[ad_1]

Accelerated computing — a capacity after confined to large-general performance computer systems in govt study labs — has gone mainstream.

Financial institutions, auto makers, factories, hospitals, shops and other folks are adopting AI supercomputers to tackle the escalating mountains of knowledge they want to approach and have an understanding of.

These impressive, successful systems are superhighways of computing. They carry facts and calculations around parallel paths on a lightning journey to actionable benefits.

GPU and CPU processors are the means alongside the way, and their onramps are rapidly interconnects. The gold standard in interconnects for accelerated computing is NVLink.

So, What Is NVLink?

NVLink is a higher-pace connection for GPUs and CPUs fashioned by a strong computer software protocol, usually riding on multiple pairs of wires printed on a laptop or computer board. It allows processors ship and obtain info from shared pools of memory at lightning pace.

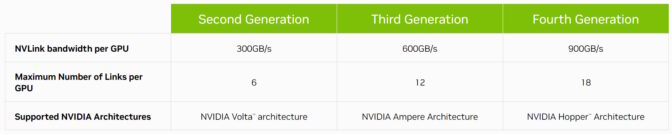

Now in its fourth technology, NVLink connects host and accelerated processors at prices up to 900 gigabytes for each next (GB/s).

Which is much more than 7x the bandwidth of PCIe Gen 5, the interconnect employed in common x86 servers. And NVLink sports 5x the strength effectiveness of PCIe Gen 5, many thanks to information transfers that eat just 1.3 picojoules for every bit.

The Historical past of NVLink

To start with introduced as a GPU interconnect with the NVIDIA P100 GPU, NVLink has advanced in lockstep with every single new NVIDIA GPU architecture.

In 2018, NVLink hit the highlight in higher general performance computing when it debuted connecting GPUs and CPUs in two of the world’s most highly effective supercomputers, Summit and Sierra.

The systems, installed at Oak Ridge and Lawrence Livermore Nationwide Laboratories, are pushing the boundaries of science in fields such as drug discovery, normal catastrophe prediction and additional.

Bandwidth Doubles, Then Grows Again

In 2020, the third-era NVLink doubled its max bandwidth for every GPU to 600GB/s, packing a dozen interconnects in every single NVIDIA A100 Tensor Core GPU.

The A100 powers AI supercomputers in organization knowledge centers, cloud computing companies and HPC labs throughout the globe.

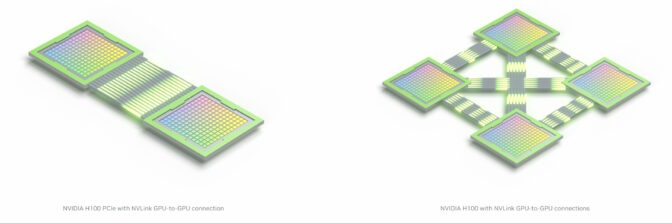

Currently, 18 fourth-era NVLink interconnects are embedded in a single NVIDIA H100 Tensor Core GPU. And the technological innovation has taken on a new, strategic function that will permit the most state-of-the-art CPUs and accelerators on the world.

A Chip-to-Chip Backlink

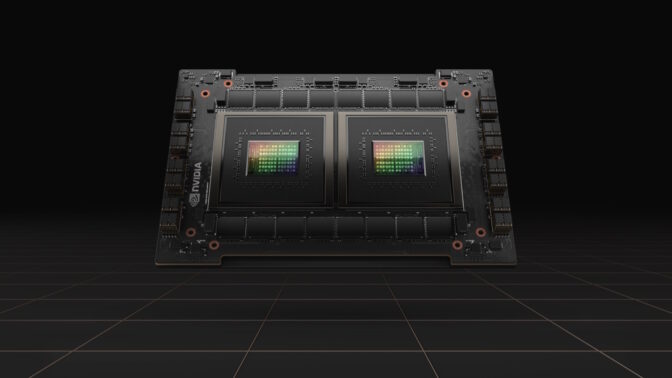

NVIDIA NVLink-C2C is a variation of the board-level interconnect to sign up for two processors inside a single deal, producing a superchip. For example, it connects two CPU chips to provide 144 Arm Neoverse V2 cores in the NVIDIA Grace CPU Superchip, a processor constructed to produce vitality-productive performance for cloud, organization and HPC consumers.

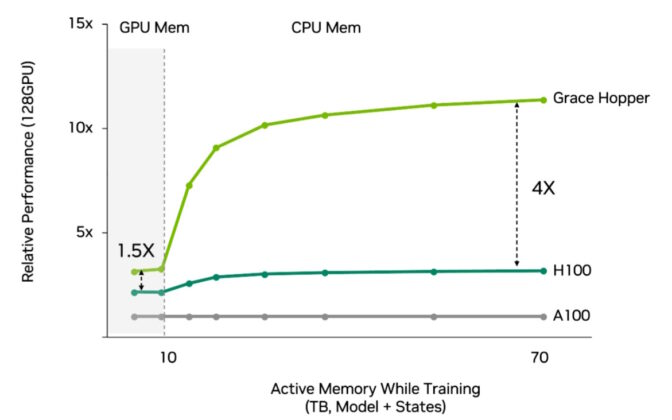

NVIDIA NVLink-C2C also joins a Grace CPU and a Hopper GPU to make the Grace Hopper Superchip. It packs accelerated computing for the world’s hardest HPC and AI work opportunities into a solitary chip.

Alps, an AI supercomputer planned for the Swiss Countrywide Computing Heart, will be amid the initial to use Grace Hopper. When it comes on the internet later this 12 months, the large-efficiency method will do the job on huge science troubles in fields from astrophysics to quantum chemistry.

Grace and Grace Hopper are also great for bringing vitality efficiency to demanding cloud computing workloads.

For instance, Grace Hopper is an suitable processor for recommender devices. These economic engines of the internet require speedy, effective entry to loads of details to provide trillions of results to billions of consumers daily.

In addition, NVLink is applied in a strong system-on-chip for automakers that consists of NVIDIA Hopper, Grace and Ada Lovelace processors. NVIDIA Drive Thor is a vehicle laptop that unifies smart capabilities this kind of as electronic instrument cluster, infotainment, automatic driving, parking and additional into a solitary architecture.

LEGO Inbound links of Computing

NVLink also acts like the socket stamped into a LEGO piece. It is the basis for creating supersystems to tackle the largest HPC and AI employment.

For instance, NVLinks on all 8 GPUs in an NVIDIA DGX procedure share speedy, immediate connections via NVSwitch chips. Jointly, they allow an NVLink community the place each and every GPU in the server is portion of a single method.

To get even more overall performance, DGX methods can them selves be stacked into modular models of 32 servers, building a powerful, productive computing cluster.

Users can join a modular block of 32 DGX devices into a single AI supercomputer using a blend of an NVLink community inside the DGX and NVIDIA Quantum-2 switched Infiniband material involving them. For instance, an NVIDIA DGX H100 SuperPOD packs 256 H100 GPUs to provide up to an exaflop of peak AI performance.

To get even a lot more general performance, people can faucet into the AI supercomputers in the cloud these kinds of as the one Microsoft Azure is developing with tens of thousands of A100 and H100 GPUs. It is a provider applied by teams like OpenAI to coach some of the world’s most significant generative AI designs.

And it’s just one much more illustration of the ability of accelerated computing.

[ad_2]

Source url