Agents cooperate better by communicating and negotiating, and sanctioning broken promises helps keep them honest

Successful communication and cooperation have been crucial for helping societies advance throughout history. The closed environments of board games can serve as a sandbox for modelling and investigating interaction and communication, and we can learn a lot from playing them. In our recent paper, published today in Nature Communications, we show how artificial agents can use communication to better cooperate in the board game Diplomacy, a vibrant domain in artificial intelligence (AI) research known for its focus on alliance building.

Diplomacy is challenging: it has simple rules but high emergent complexity, owing to the strong interdependencies between players and its immense action space. To help tackle this challenge, we designed negotiation algorithms that allow agents to communicate and agree on joint plans, enabling them to overcome agents lacking this ability.

Cooperation is particularly challenging when we cannot rely on our peers to do what they promise. We use Diplomacy as a sandbox to explore what happens when agents may deviate from their past agreements. Our research illustrates the risks that emerge when complex agents are able to misrepresent their intentions or mislead others regarding their future plans, which leads to another big question: What are the conditions that promote trustworthy communication and teamwork?

We show that the strategy of sanctioning peers who break contracts dramatically reduces the advantages they can gain by abandoning their commitments, thereby fostering more honest communication.

What is Diplomacy and why is it important?

Games such as chess, poker, Go, and many video games have always been fertile ground for AI research. Diplomacy is a seven-player game of negotiation and alliance formation, played on an old map of Europe partitioned into provinces, where each player controls multiple units (rules of Diplomacy). In the standard version of the game, called Press Diplomacy, each turn includes a negotiation phase, after which all players reveal their chosen moves simultaneously.

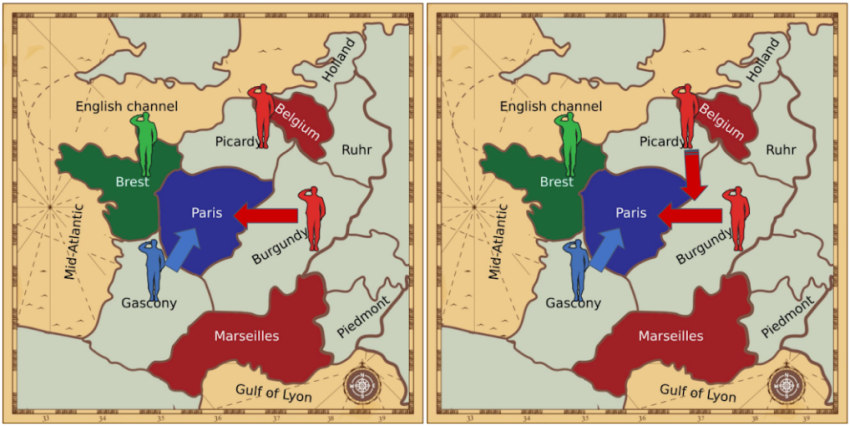

The heart of Diplomacy is the negotiation phase, where players try to agree on their next moves. For example, one unit may support another unit, allowing it to overcome resistance by other units, as illustrated here:

Left: two units (a Red unit in Burgundy and a Blue unit in Gascony) attempt to move into Paris. As the units have equal strength, neither succeeds.

Right: the Red unit in Picardy supports the Red unit in Burgundy, overpowering Blue's unit and allowing the Red unit into Paris.
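
To make the support mechanic concrete, here is a deliberately simplified adjudicator, assuming that a move's strength is one plus the number of supports it receives and that equally strong moves into the same province bounce. The `Move` and `Support` classes and the `resolve` function are hypothetical illustrations, not part of any real Diplomacy engine, and actual adjudication has many more rules (cut supports, defending units, convoys).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Move:
    source: str  # province the unit moves from
    target: str  # province it tries to enter


@dataclass(frozen=True)
class Support:
    supporter: str  # province of the supporting unit
    move: Move      # the move it supports


def resolve(moves, supports):
    """Toy adjudicator: map each target province to the source of the move
    that enters it, or None if every move into it bounces.

    Strength of a move = 1 + number of supports for exactly that move.
    The unique strongest move into a province succeeds; equal strengths bounce.
    Ignores cut supports, defending units, convoys and other real rules.
    """
    strength = {m: 1 for m in moves}
    for s in supports:
        if s.move in strength:
            strength[s.move] += 1
    contenders = defaultdict(list)
    for m in moves:
        contenders[m.target].append(m)
    outcome = {}
    for target, attackers in contenders.items():
        best = max(strength[m] for m in attackers)
        winners = [m for m in attackers if strength[m] == best]
        outcome[target] = winners[0].source if len(winners) == 1 else None
    return outcome


red = Move("Burgundy", "Paris")
blue = Move("Gascony", "Paris")
print(resolve([red, blue], []))                         # {'Paris': None}
print(resolve([red, blue], [Support("Picardy", red)]))  # {'Paris': 'Burgundy'}
```

The first call reproduces the standoff in the left panel; adding Picardy's support gives the Burgundy move strength 2 against Blue's 1, so it enters Paris.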

Computational approaches to Diplomacy have been researched since the 1980s, many of them on a simpler version of the game called No-Press Diplomacy, where strategic communication between players is not allowed. Researchers have also proposed computer-friendly negotiation protocols, sometimes called "Restricted-Press".

What did we study?

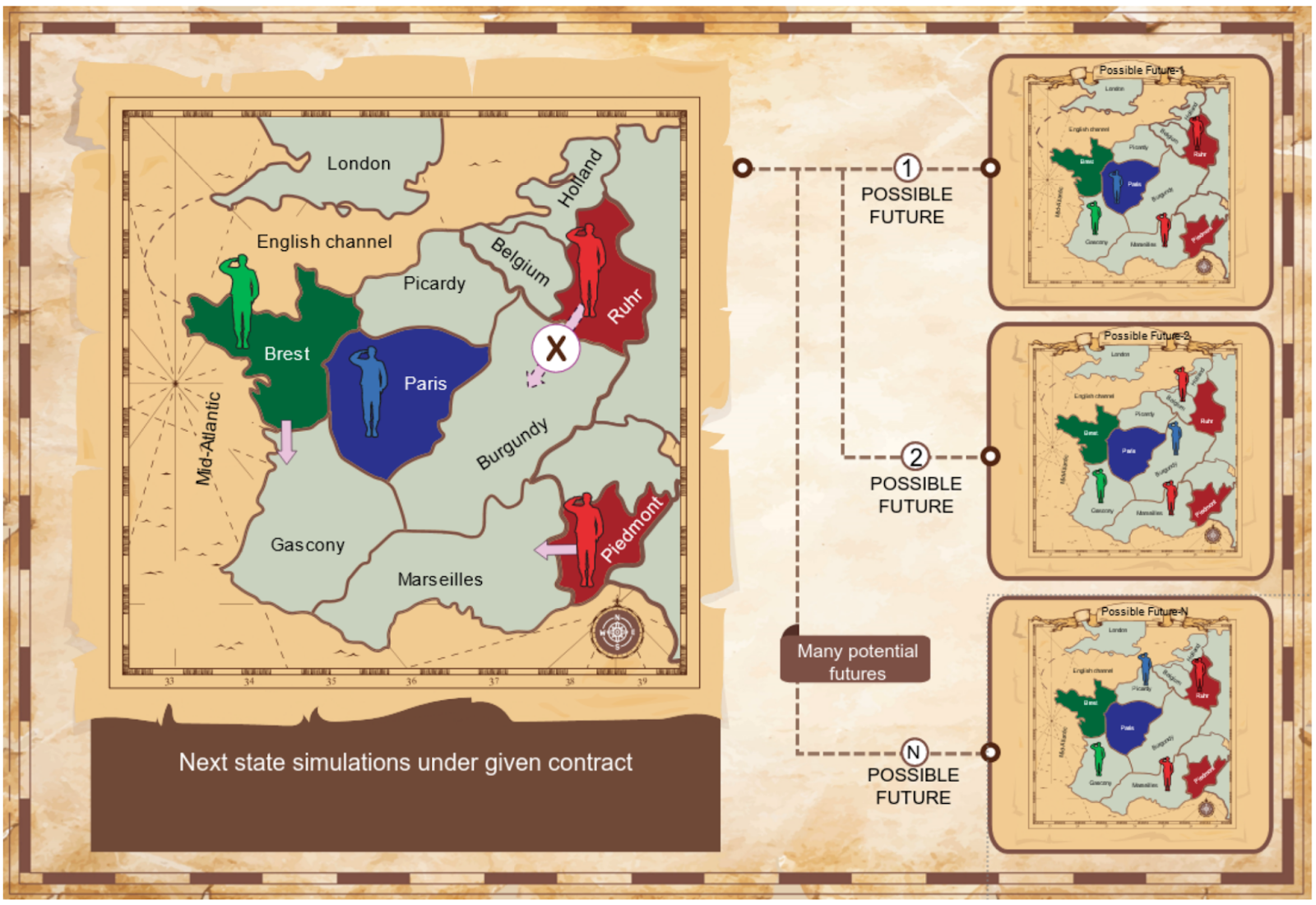

We use Diplomacy as an analog of real-world negotiation, providing methods for AI agents to coordinate their moves. We take our non-communicating Diplomacy agents and augment them to play Diplomacy with communication by giving them a protocol for negotiating contracts for a joint plan of action. We call these augmented agents Baseline Negotiators, and they are bound by their agreements.

Left: a restriction allowing only certain actions to be taken by the Red player (they are not allowed to move from Ruhr to Burgundy, and must move from Piedmont to Marseilles).

Right: a contract between the Red and Green players, which places restrictions on both sides.
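
One way to picture restrictions and contracts in code is sketched below. It assumes orders are encoded as `(from_province, to_province)` pairs, with a hold written as the same province twice; the `Restriction` and `Contract` classes are hypothetical and not the paper's representation. Green's restriction is invented purely for illustration, since the caption does not spell it out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Restriction:
    """Limits on one player's orders for a single turn.

    Orders are encoded (hypothetically) as (from_province, to_province) pairs;
    a unit that holds is written as (p, p).
    """
    player: str
    forbidden: frozenset = frozenset()  # moves this player may not order
    required: frozenset = frozenset()   # moves this player must order

    def permits(self, orders: dict) -> bool:
        # `orders` maps each of the player's unit locations to its destination.
        chosen = set(orders.items())
        return chosen.isdisjoint(self.forbidden) and self.required <= chosen


@dataclass(frozen=True)
class Contract:
    """A deal: a bundle of restrictions, possibly binding several players."""
    restrictions: tuple

    def honoured_by(self, player: str, orders: dict) -> bool:
        return all(r.permits(orders) for r in self.restrictions if r.player == player)


# The Red restriction from the figure, plus an invented restriction on Green.
red = Restriction("Red",
                  forbidden=frozenset({("Ruhr", "Burgundy")}),
                  required=frozenset({("Piedmont", "Marseilles")}))
green = Restriction("Green", forbidden=frozenset({("Munich", "Ruhr")}))  # hypothetical
deal = Contract((red, green))

print(deal.honoured_by("Red", {"Ruhr": "Ruhr", "Piedmont": "Marseilles"}))      # True
print(deal.honoured_by("Red", {"Ruhr": "Burgundy", "Piedmont": "Marseilles"}))  # False
```

Representing a contract as a bundle of per-player restrictions makes the "restrictions on both sides" of the right-hand panel explicit, and gives a simple way to check afterwards whether each party kept its word.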

We consider two protocols: the Mutual Proposal Protocol and the Propose-Choose Protocol, discussed in detail in the full paper. Our agents apply algorithms that identify mutually beneficial deals by simulating how the game might unfold under various contracts. We use the Nash Bargaining Solution from game theory as a principled foundation for identifying high-quality agreements. The game may unfold in many ways depending on the actions of players, so our agents use Monte-Carlo simulations to see what might happen in the next turn.
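
The Nash Bargaining Solution selects, among agreements that leave every party better off than having no deal, the one that maximises the product of their gains over that no-deal (disagreement) point. The sketch below combines that rule with Monte-Carlo estimates of each player's value. It is a simplified illustration under stated assumptions: `toy_simulate` and its payoff table are hypothetical stand-ins for rolling the game forward one turn, and the agents in the paper use their own models rather than anything like this table.

```python
import math
import random
from statistics import mean


def estimate_values(simulate, contract, players, n_samples, rng):
    """Monte-Carlo estimate of each player's expected value if `contract` is adopted."""
    samples = [simulate(contract, rng) for _ in range(n_samples)]
    return {p: mean(s[p] for s in samples) for p in players}


def nash_bargaining_choice(simulate, candidates, players, n_samples=200, seed=0):
    """Pick the candidate contract maximising the product of gains over no deal.

    `simulate(contract, rng)` is a hypothetical one-turn rollout returning
    {player: value}; `contract=None` means no agreement (the disagreement point).
    Returns None when no candidate benefits every player.
    """
    rng = random.Random(seed)
    disagreement = estimate_values(simulate, None, players, n_samples, rng)
    best, best_product = None, 0.0
    for contract in candidates:
        values = estimate_values(simulate, contract, players, n_samples, rng)
        gains = [values[p] - disagreement[p] for p in players]
        if all(g > 0 for g in gains):  # every party must prefer the deal
            product = math.prod(gains)
            if product > best_product:
                best, best_product = contract, product
    return best


# Hypothetical mean payoffs, perturbed by Gaussian noise to mimic rollout variance.
TOY_PAYOFFS = {
    None: {"Red": 1.0, "Green": 1.0},
    "split_evenly": {"Red": 2.0, "Green": 2.0},
    "favour_red": {"Red": 3.5, "Green": 1.2},
    "exploit_green": {"Red": 4.0, "Green": 0.5},
}


def toy_simulate(contract, rng):
    return {p: v + rng.gauss(0.0, 0.1) for p, v in TOY_PAYOFFS[contract].items()}


choice = nash_bargaining_choice(
    toy_simulate, ["split_evenly", "favour_red", "exploit_green"], ["Red", "Green"])
print(choice)  # split_evenly
```

Note that `favour_red` offers a larger total gain (about 2.7 versus 2.0), but the product rule prefers the balanced `split_evenly`, and `exploit_green` is never eligible because it leaves Green worse off than no deal.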

Our experiments show that our negotiation mechanism allows Baseline Negotiators to significantly outperform baseline non-communicating agents.

Agents breaking agreements

In Diplomacy, agreements made during negotiation are not binding (communication is "cheap talk"). But what happens when agents who agree to a contract in one turn deviate from it the next? In many real-life settings people agree to act in a certain way, but fail to meet their commitments later on. To enable cooperation between AI agents, or between agents and humans, we must examine the potential pitfall of agents strategically breaking their agreements, and ways to remedy this problem. We used Diplomacy to study how the ability to abandon our commitments erodes trust and cooperation, and to identify conditions that foster honest cooperation.

So we consider Deviator Agents, which overcome honest Baseline Negotiators by deviating from agreed contracts. Simple Deviators simply "forget" they agreed to a contract and move however they wish. Conditional Deviators are more sophisticated: they optimise their actions assuming that other players who accepted a contract will act in accordance with it.
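
The difference between the two deviator types can be shown in a one-turn toy game. This sketch assumes each agent maximises expected payoff against a known distribution over its partner's actions, and that a contract restricts both sides' actions; the payoffs, prior and function names are all hypothetical. The Simple Deviator ignores the deal entirely, while the Conditional Deviator ignores only its own obligations and best-responds to a partner it expects to comply.

```python
Policy = dict[str, float]  # action -> probability


def restrict(policy: Policy, allowed) -> Policy:
    """Condition a policy on a contract: drop disallowed actions and renormalise."""
    kept = {a: p for a, p in policy.items() if a in allowed}
    total = sum(kept.values())
    return {a: p / total for a, p in kept.items()}


def best_action(my_actions, opponent: Policy, payoff):
    """Action maximising expected payoff against an opponent policy."""
    return max(my_actions, key=lambda a: sum(p * payoff[a, o] for o, p in opponent.items()))


def baseline_negotiator(my_actions, opponent, payoff, contract):
    # Honours the contract and assumes the partner does too.
    mine = [a for a in my_actions if a in contract["mine"]]
    return best_action(mine, restrict(opponent, contract["theirs"]), payoff)


def simple_deviator(my_actions, opponent, payoff, contract):
    # "Forgets" the contract: plays as if no deal had been made.
    return best_action(my_actions, opponent, payoff)


def conditional_deviator(my_actions, opponent, payoff, contract):
    # Ignores its own obligations but expects the partner to keep theirs.
    return best_action(my_actions, restrict(opponent, contract["theirs"]), payoff)


# Hypothetical one-turn example; payoffs are to "me".
PAYOFF = {("hold", "defend"): 1, ("hold", "expand"): 2,
          ("attack", "defend"): 0, ("attack", "expand"): 4}
ACTIONS = ["hold", "attack"]
PRIOR = {"defend": 0.8, "expand": 0.2}           # partner's behaviour without a deal
DEAL = {"mine": {"hold"}, "theirs": {"expand"}}  # I hold; partner expands elsewhere

for agent in (baseline_negotiator, simple_deviator, conditional_deviator):
    print(agent.__name__, agent(ACTIONS, PRIOR, PAYOFF, DEAL))
# baseline_negotiator hold
# simple_deviator hold
# conditional_deviator attack
```

Here the Simple Deviator happens to keep its side, because attacking a partner who is likely to defend does not pay; the Conditional Deviator attacks precisely because it trusts the partner to honour the deal and leave its home exposed.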

We show that Simple and Conditional Deviators significantly outperform Baseline Negotiators, the Conditional Deviators overwhelmingly so.

Encouraging agents to be honest

Next we tackle the deviation problem using Defensive Agents, which respond adversely to deviations. We investigate Binary Negotiators, who simply cut off communications with agents who break an agreement with them. But shunning is a mild reaction, so we also develop Sanctioning Agents, who don't take betrayal lightly, but instead modify their goals to actively attempt to lower the deviator's value: an opponent with a grudge! We show that both types of Defensive Agents reduce the advantage of deviation, particularly Sanctioning Agents.
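
Both defences can be sketched as one hypothetical class. Here a Binary Negotiator is the case `sanction_weight = 0` (it only stops negotiating with deviators), while a Sanctioning Agent subtracts a weighted copy of each deviator's value from its own objective. The linear penalty, the weight, and the assumption that Sanctioning Agents also stop negotiating are illustrative choices, not details taken from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class DefensiveAgent:
    """sanction_weight == 0 -> Binary Negotiator; > 0 -> Sanctioning Agent.
    (Hypothetical parameterisation for illustration.)"""
    name: str
    sanction_weight: float = 0.0
    deviators: set = field(default_factory=set)

    def observe(self, partner: str, honoured: bool) -> None:
        # Remember anyone who broke a contract with us.
        if not honoured:
            self.deviators.add(partner)

    def will_negotiate_with(self, partner: str) -> bool:
        # Shunning: no further deals with known deviators (assumed for both variants).
        return partner not in self.deviators

    def objective(self, values: dict) -> float:
        # Own value minus a weighted penalty on every deviator's value.
        grudge = sum(v for p, v in values.items() if p in self.deviators)
        return values[self.name] - self.sanction_weight * grudge

    def choose(self, outcomes: dict) -> str:
        # outcomes: plan name -> {player: estimated value}
        return max(outcomes, key=lambda plan: self.objective(outcomes[plan]))


OUTCOMES = {"expand_east": {"Green": 3.0, "Red": 3.0},
            "attack_red": {"Green": 2.0, "Red": 0.5}}

binary = DefensiveAgent("Green")
sanctioner = DefensiveAgent("Green", sanction_weight=1.0)
for agent in (binary, sanctioner):
    agent.observe("Red", honoured=False)
    print(agent.will_negotiate_with("Red"), agent.choose(OUTCOMES))
# False expand_east
# False attack_red
```

After Red's betrayal, both agents refuse to negotiate, but only the Sanctioning Agent accepts a lower value for itself (2.0 instead of 3.0) in order to push Red's value down, which is what makes deviation costly.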

Finally, we introduce Learned Deviators, who adapt and optimise their behaviour against Sanctioning Agents over multiple games, trying to render the above defences less effective. A Learned Deviator will only break a contract when the immediate gains from deviation are high enough and the ability of the other agent to retaliate is low enough. In practice, Learned Deviators occasionally break contracts late in the game, and in doing so achieve a slight advantage over Sanctioning Agents. Nevertheless, such sanctions drive the Learned Deviator to honour more than 99.7% of its contracts.
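
The Learned Deviator's behaviour described above reduces to two thresholds: deviate only when the immediate gain is high enough and the partner's ability to retaliate is low enough. The toy sketch below tunes those thresholds by grid search over many simulated situations. Everything in it is hypothetical: the retaliation proxy (partner strength times turns remaining), the per-turn sanction cost, and the grid search itself, which stands in for whatever optimisation the paper actually uses.

```python
import itertools
import random

SANCTION_COST_PER_TURN = 0.3  # hypothetical damage a sanctioning partner inflicts per turn


def retaliation_capacity(partner_strength, turns_remaining):
    # Toy proxy: a strong partner with many turns left can punish more.
    return partner_strength * turns_remaining


def should_deviate(gain, partner_strength, turns_remaining, thresholds):
    """Break the contract only if the gain is large and retaliation is weak."""
    gain_min, retaliation_max = thresholds
    return (gain >= gain_min
            and retaliation_capacity(partner_strength, turns_remaining) <= retaliation_max)


def deviation_payoff(gain, partner_strength, turns_remaining):
    # Net effect of deviating versus honouring under the toy sanction model.
    return gain - SANCTION_COST_PER_TURN * retaliation_capacity(partner_strength, turns_remaining)


def learn_thresholds(situations, gain_grid, retaliation_grid):
    """Grid search for the thresholds with the best total payoff over many games."""
    def total(thresholds):
        return sum(deviation_payoff(*s) for s in situations if should_deviate(*s, thresholds))
    return max(itertools.product(gain_grid, retaliation_grid), key=total)


rng = random.Random(0)
# Each situation: (immediate gain, partner strength, turns remaining), all hypothetical.
situations = [(rng.random(), rng.random(), rng.randint(0, 10)) for _ in range(500)]
learned = learn_thresholds(situations, [0.0, 0.25, 0.5, 0.75, 1.01], [0, 0.5, 1, 2, 4, 10])

print(should_deviate(0.9, 0.5, 0, learned))   # True: final turn, nobody can retaliate
print(should_deviate(0.9, 1.0, 10, learned))  # False: strong partner, long game ahead
```

Because the retaliation proxy shrinks as the game nears its end, deviation becomes worthwhile mainly in the last turns, loosely mirroring the late-game contract breaking observed for Learned Deviators.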

We also examine possible learning dynamics of sanctioning and deviation: what happens when Sanctioning Agents may also deviate from contracts, and the potential incentive to stop sanctioning when this behaviour is costly. Such issues can gradually erode cooperation, so additional mechanisms, such as repeated interaction across multiple games or trust and reputation systems, may be needed.

Our paper leaves many questions open for future research: Is it possible to design more sophisticated protocols to encourage even more honest behaviour? How could one handle combining communication techniques and imperfect information? Finally, what other mechanisms could deter the breaking of agreements? Building fair, transparent and trustworthy AI systems is an extremely important topic, and it is a key part of DeepMind's mission. Studying these questions in sandboxes like Diplomacy helps us better understand tensions between cooperation and competition that might exist in the real world. Ultimately, we believe tackling these challenges allows us to better understand how to develop AI systems in line with society's values and priorities.

Read our full paper here.
