[ad_1]

Inside of the folder src, you can dive into the files utilised to crank out the plots that will be offered in Segment 5 (prediction_plots.jl), process the stock charges before the model instruction (details_preprocessing.jl), train and make the GRU network (scratch_gru.jl), and combine all of the over information at after (principal.jl). In this part, we will delve into the 4 features that compose the heart of the GRU network architecture and are the types made use of to put into practice ahead pass and backpropagation all through the training.

4.1. gru_cell_ahead functionality

The code snippet offered under corresponds to thegru_mobile_forward operate. This function gets the present input (x), the preceding concealed point out (prev_h), and a dictionary of parameters as inputs (parameters). With the previously mentioned parameters, this purpose permits just one phase of GRU cell forward propagation and computes the update gate (z), the reset gate (r), the new memory cell or applicant concealed state (h_tilde), and the following hidden point out (subsequent_h), making use of the sigmoid and tanh capabilities. It also computes the prediction of the GRU mobile (y_pred). Inside of this perform, the equations presented in Figures 3 and 4 are carried out.

4.2. gru_ahead operate

Compared with gru_cell_ahead, gru_ahead performs the forward go of the GRU network, i.e., the ahead propagation for a sequence of time techniques. This function receives the input tensor (x), the first concealed state (ho), and a dictionary as enter (parameters).

If you are new to sequential types, never confuse a time action with the iterations so that the model mistake can be minimized.

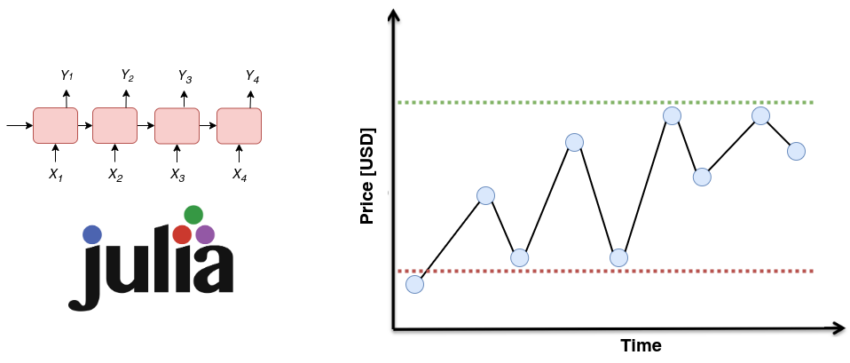

Don’t confuse the x that gru_cell_forward receives with the a person that gru_forward gets. In gru_ahead, x has three dimenions in its place of two. The third dimension corresponds to the full GRU cells that the RNN layer has. In shorter, gru_mobile_ahead is linked to Determine 2, although gru_forward is connected to Determine 1.

gru_ahead iterates around every time step in the sequence, contacting the gru_mobile_ahead functionality to compute subsequent_h and y_pred. It outlets the effects in h and y, respectively.

4.3. gru_mobile_backward operate

gru_mobile_backward performs the backward move for a solitary GRU cell. gru_mobile_ahead receives the gradient of the concealed point out (dh) as enter, accompanied by the cache that includes the things expected to determine the derivatives in Figure 4 (i.e., following_h, prev_h, z, r, h_tilde, x, and parameters). In this way, gru_cell_backward computes the gradients for the weights matrices (i.e., Wz, Wr, and Wh) and the biases (i.e., bz, br, and bh). All the gradients are stored in a Julia dictionary (gradients).

4.4. gru_backward function

gru_backward performs the backpropagation for the complete GRU community, i.e., for the comprehensive sequence of time actions. This purpose receives the gradient of the concealed condition tensor (dh) and the caches. Not like in the circumstance of gru_cell_backward, dh for gru_backward has a 3rd dimension corresponding to the whole number of time measures in the sequence or GRU cells in the GRU network layer. This operate iterates about the time steps in reverse purchase, contacting gru_cell_backward to compute the gradients for just about every time move, accumulating them across the loop.

It is vital to note at this stage that this undertaking just works by using gradient descent to update the GRU network parameters and does not consist of any performance that has an effect on the studying fee or introduces momentum. In addition, the implementation was made with a regression concern in thoughts. However, due to the modularization carried out, just very little variations are required to obtain a diverse conduct.

[ad_2]

Source url