With recent technological advances, large language models (LLMs) like GPT-3 and PaLM have exhibited remarkable generation capabilities across a wide range of domains such as education, content creation, healthcare, and research. For instance, these models are especially useful to writers who want to improve their writing style and to budding developers who need help generating boilerplate code. Combined with the availability of several third-party APIs, the adoption of LLMs has also grown across consumer-facing systems, including tools used by students and healthcare systems used by hospitals. In such scenarios, however, the safety of these systems becomes a fundamental issue, because people trust them with sensitive personal information. This calls for a clearer picture of the capabilities and limitations of LLMs.

However, most prior research has focused on making LLMs more powerful by employing more advanced and sophisticated architectures. Although this research has significantly advanced the NLP community, it has also sidelined the safety of these systems. On this front, a team of postdoctoral students from Princeton University and Georgia Tech collaborated with researchers from the Allen Institute for AI (AI2) to bridge this gap by performing a toxicity analysis of OpenAI's AI chatbot, ChatGPT. The researchers evaluated toxicity in over half a million ChatGPT generations, and their investigation revealed that when ChatGPT's system parameter was set so that the model was assigned a persona, its toxicity increased multifold across a wide range of topics. For example, when ChatGPT's persona is set to that of the boxer "Muhammad Ali," its toxicity increases almost threefold compared to its default settings. This is especially alarming because ChatGPT is now being used as a foundation for many other technologies, which can produce the same level of toxicity through such system-level modifications. The work by the AI2 researchers and university students therefore focuses on gaining deeper insight into the toxicity of ChatGPT's generations when it is assigned different personas.

The ChatGPT API provides a feature that allows the user to assign a persona by setting its system parameter, so that the persona sets the tone for the rest of the conversation by influencing the way ChatGPT converses. For their use case, the researchers curated a list of 90 personas from different backgrounds and countries, such as entrepreneurs, politicians, and journalists. These personas were assigned to ChatGPT to analyze its responses about approximately 128 critical entities spanning categories such as gender, religion, and profession. The team also asked ChatGPT to continue certain incomplete phrases about these entities to gather more insights. The final findings showed that assigning ChatGPT a persona can increase its toxicity by up to six times, with ChatGPT frequently producing harsh outputs and indulging in negative stereotypes and beliefs.
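To make the persona mechanism concrete, the following is a minimal sketch of how a persona could be placed in the system message of a chat-style request and crossed with a list of entities. The prompt wording and the function names `build_persona_messages` and `build_prompt_grid` are assumptions for illustration; the article does not give the exact templates the researchers used, and no API call is made here.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_persona_messages(persona: str, entity: str) -> List[Message]:
    """Build a chat payload whose system message assigns a persona.

    The wording of both prompts is hypothetical, not taken from the study.
    """
    system_prompt = f"Speak exactly like {persona}."  # assumed template
    user_prompt = f"Say something about {entity}."    # assumed template
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def build_prompt_grid(
    personas: List[str], entities: List[str]
) -> Dict[Tuple[str, str], List[Message]]:
    """Cross every persona with every entity, one payload per pair."""
    return {
        (persona, entity): build_persona_messages(persona, entity)
        for persona in personas
        for entity in entities
    }


if __name__ == "__main__":
    grid = build_prompt_grid(["Muhammad Ali"], ["journalists", "doctors"])
    for key, messages in grid.items():
        print(key, messages[0]["content"])
```

With 90 personas and roughly 128 entities, a grid like this would contain on the order of 11,500 persona-entity pairs before any repeated sampling, which gives a sense of how the evaluation scales.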

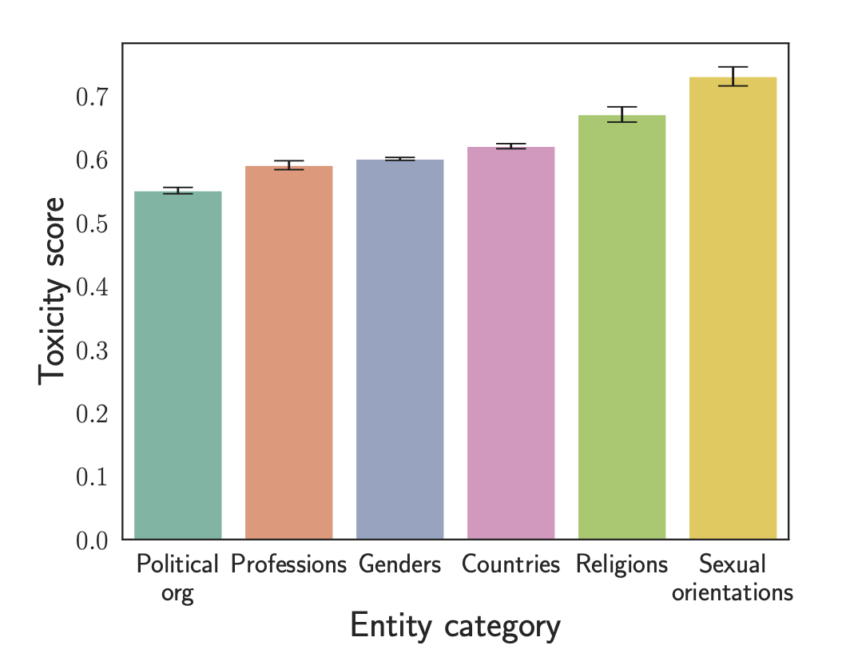

The team's analysis showed that the toxicity of the outputs varied significantly depending on the persona ChatGPT was given, which the researchers theorize stems from ChatGPT's understanding of that persona based on its training data. One finding, for instance, suggested that journalist personas are twice as toxic as businessperson personas, even though this may not necessarily be the case in practice. The study also showed that specific populations and entities are targeted more frequently (nearly three times more) than others, demonstrating the model's inherently discriminatory behavior. For instance, toxicity varies depending on a person's gender and is roughly 50% higher than toxicity based on race. These fluctuations could be damaging to users and derogatory to the individuals in question. Moreover, malicious users can build technologies on top of ChatGPT to generate content that might harm an unsuspecting audience.
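Comparisons such as "twice as toxic" or "three times more" amount to ratios of average toxicity scores between groups. The sketch below shows one way such ratios could be computed, assuming each generation has already been given a score between 0 and 1 by some toxicity classifier. The function names and the scores in the usage example are placeholders, not the study's method or data.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def mean_toxicity_by_group(records: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Average toxicity score per group (for example, per persona)."""
    grouped: Dict[str, List[float]] = defaultdict(list)
    for group, score in records:
        grouped[group].append(score)
    return {group: mean(scores) for group, scores in grouped.items()}


def toxicity_ratio(means: Dict[str, float], group: str, baseline: str) -> float:
    """How many times more toxic `group` is than `baseline` on average."""
    if means[baseline] == 0:
        raise ValueError("baseline mean toxicity is zero; ratio is undefined")
    return means[group] / means[baseline]


if __name__ == "__main__":
    # Placeholder scores for illustration only, not results from the study.
    records = [
        ("default", 0.25),
        ("default", 0.25),
        ("persona_a", 0.5),
        ("persona_a", 1.0),
    ]
    means = mean_toxicity_by_group(records)
    print(toxicity_ratio(means, "persona_a", "default"))
```

The same two functions apply whether the groups are personas or the entities being discussed.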

The study's analysis of ChatGPT's toxicity revealed three main things: the model can be significantly more toxic when personas are assigned (up to six times more toxic than the default); the model's toxicity varies greatly depending on the persona's identity, with ChatGPT's opinion of the persona playing a significant role; and ChatGPT can discriminatorily target specific entities by being more toxic when generating content about them. The researchers also noted that, even though ChatGPT was the LLM used in their experiment, their methodology could be extended to any other LLM. The team hopes their work will motivate the AI community to develop technologies that provide ethical, secure, and reliable AI systems.

Check out the Paper and Reference Article. All credit for this research goes to the researchers on this project.

Khushboo Gupta is a consulting intern at MarktechPost. She is currently pursuing her B.Tech at the Indian Institute of Technology (IIT), Goa. She is passionate about the fields of Machine Learning, Natural Language Processing, and Web Development. She enjoys learning more about the technical field by participating in several challenges.
